Low-Resource Multilingual North Germanic NMT

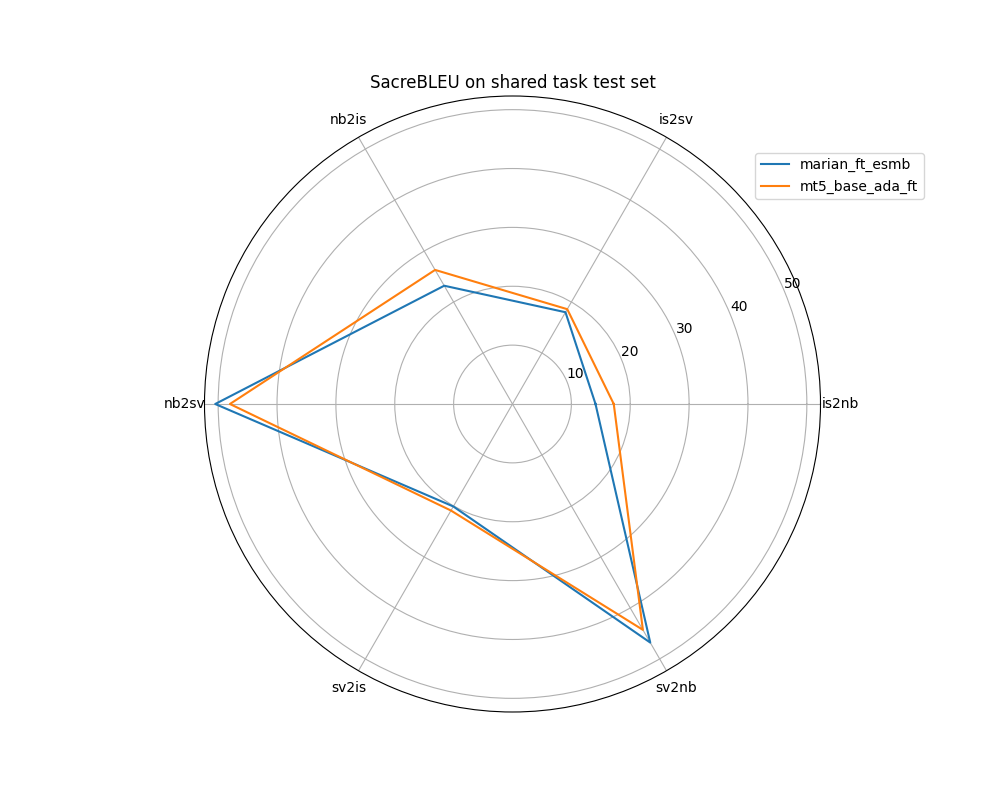

Our team placed first in the WMT2021 shared task on multilingual low-resource machine translation for North Germanic languages. The task asked participants to train models that translate to and from Icelandic (is), Norwegian Bokmål (nb), and Swedish (sv). We followed a fairly standard pipeline, adding a few small, targeted tricks at each step to boost performance. Making good use of both parallel and monolingual data, together with pre-training, back-translation, fine-tuning, and ensembling, allowed our models to outperform the other submitted systems in most translation directions.
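This post does not spell out how the ensemble combined its member models. A common approach in NMT is to average the members' next-token probabilities at every decoding step, so that a token one model is unsure about can still win if the others favour it. The sketch below assumes that approach, uses greedy search instead of beam search for brevity, and stands in toy callables for real models; `ensemble_step` and `greedy_ensemble_decode` are hypothetical names, not part of any specific toolkit.

```python
import math
from typing import Callable, Dict, List, Sequence

# Hypothetical model interface: given the target prefix decoded so far,
# return a probability distribution over the next token.
NextTokenModel = Callable[[Sequence[str]], Dict[str, float]]


def ensemble_step(dists: List[Dict[str, float]]) -> Dict[str, float]:
    """Average next-token probabilities across models; return log-probabilities."""
    n = len(dists)
    vocab = set().union(*dists)  # union of all tokens any model assigns mass to
    avg = {tok: sum(d.get(tok, 0.0) for d in dists) / n for tok in vocab}
    # Drop zero-probability tokens so math.log is always defined.
    return {tok: math.log(p) for tok, p in avg.items() if p > 0.0}


def greedy_ensemble_decode(
    models: List[NextTokenModel], max_len: int = 20, eos: str = "</s>"
) -> List[str]:
    """Greedily decode one sentence using the ensemble-averaged distribution."""
    prefix: List[str] = []
    for _ in range(max_len):
        log_probs = ensemble_step([m(prefix) for m in models])
        # sorted() makes tie-breaking deterministic (alphabetical).
        best = max(sorted(log_probs), key=log_probs.get)
        if best == eos:
            break
        prefix.append(best)
    return prefix
```

With two toy models, one preferring `hej` (0.6 vs 0.4) and the other preferring `hallo` (0.7 vs 0.3), the averaged distribution gives `hallo` 0.55 against `hej` 0.45, so the ensemble outputs `["hallo"]` even though the first model alone would output `["hej"]`. Averaging in probability space (rather than multiplying probabilities) is one design choice among several; weighted averages are another option.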